Clearing Away The Less Technical Questions

Linked Read Technologies & PacBio

"Personally, I wouldn't really consider 10x or IGenomX as much as a threat if for instance, Pacbio reduces their cost as they hope to by loading more ZMWs. And these technologies would perform better on Pacbio over Illumina data."

Yes, PacBio is continuing to drive up the yield from flowcells, and therefore drive down the cost. They are still a ways from the cost per basepair of the low end Illumina boxes, I think (haven't done the calculation recently), and that means they are far from the cost on the big HiSeqs. Illumina sometimes isn't shy about their margins, so if PacBio started getting close the goal line might move.

I've sometimes wondered about running the linked read technologies on PacBio, but I think the gains are limited. It would be a way to get around the read length challenges on PacBio, but it would probably require a lot of tuning to get the longer fragment sizes that would make it worth it.

Publication Growth for PacBio and ONT

"One thing that PacBio has in their favor is the number of papers that are being published using their technology. That's an early indication of a good technology."

Papers are an important measure, but PacBio has at least a two-year lead in this department. My first access to PacBio data was in Spring 2012, which was around the time the first commercial provider was firing up their machine. A few labs had them even earlier; it looks from PacBio's publications list (which has a nice year filter & includes preprints) that publications really started going with 4 in 2010 followed by 9 in 2011, 7 in 2012 and then climbing to 37 in 2013, 292 in 2014 and 586 in 2015. Curiously, there have been only 426 so far this year, which would suggest a plateau that I don't believe. My hunch is that this represents a curation lag; my inclination is that PacBio usage is still growing.

Oxford doesn't have such a nice filter for their publication list, alas. But we do know that the MinION didn't launch until mid-2014, and those early flowcells were plagued with instability during shipping and other chemistry issues. I counted 74 publications on their list; PubMed turns up about 50 of these with "minion AND (nanopore OR sequencing)" [with some manual filtering; the raw search gets some papers by an author named Minion from long before the sequencing era]. Based on that PubMed search (and hoping what it doesn't pick up is nearly entirely bioRxiv preprints), I believe 2014 had only 3 publications: the horrible hatchet job, the PoreTools publication and a first microbial genome sequencing. The PubMed search suggests there were 22 publications on MinION in 2015 (note that I am going by publication date; tracking when pre-prints or advanced access appeared is beyond the scope of this very crude analysis). So there should be about 50 publications or preprints in 2016. This is very rapid uptake of the technology, at least on par with PacBio and perhaps a bit ahead (though again, I wouldn't make a claim there without doing a much more in depth analysis).

Data Release & Transparency

"In a field that relies on openness and transparency, I guess ONT's restrictions on who can get their pilot minions do not reflect as well."

As noted by another commenter, Oxford ended the MAP early access program and will now sell the sequencers broadly, though not to direct competitors. The only data restriction Oxford ever placed was that MAP participants were not supposed to publicly discuss their lambda phage burn-in runs. Otherwise, many MinION users have been very free with data, and the MARC (MinION Analysis and Reference Consortium) has many public datasets online.

"For instance, ONT announced on 20th October that they had sequenced the human genome on a minion, but no data was released and still hasn't been released to my knowledge... It appears to fit a pattern of a lack of transparency at least for a novice observer like me."

I'd ascribe this more to trying to rush out an announcement before being quite organized on the data release, but I tend to be generous with most people in such matters. I don't see a pattern of a lack of transparency, more a consistent public declaration of difficult milestones that are rarely reached on time.

An Ambiguous Point on Money

"While one would expect nanopore tech to be eventually successful, one would be equally worried if they put all their money into Oxford Nanopore i think."

This statement is a bit enigmatic as to what type of money putting you are discussing. If you mean in the investment world (and my giving of investment advice is strictly limited to urging everyone to read A Random Walk Down Wall Street), one can't invest directly in Oxford Nanopore, as it is a private company. Pacific Biosciences is, of course, publicly listed. If you mean in the research space, MinION is a low investment, the first sequencer since radioactive Sanger for which that statement can be made. Oxford sells the devices for $1K, but that package contains about $1K in consumables.

The Fun Stuff: Homopolymers.

Now, where I can go into excruciating technical detail is on Mohan's reaction to my optimism that Oxford wouldn't be hurt by being restricted to 1D reads and that the homopolymer issues are solvable.

"Regarding nanopore technologies, you mention the usual systematic homopolymer error, has there been any public research suggesting that this can improve ? This is a problem that all nanopore based techs have and have not solved for a while. The only direction appears to be searching for new pores and that appears to have only swapped one systematic error for another unless i am mistaken.

"I am curious to know what makes you optimistic that they can reduce this error, that would be very interesting. It's a long time waiting, much (?) longer than PacBio took to catch up with its earlier promises esp considering the rewards up for grabs.

"Also in terms of just using 1D, isn't that going to make it in general less reliable over using 2D ? I guess CCS is definitely a special feature for PacBio, enabling the same base to be read etc so it's not surprising they are defending it."

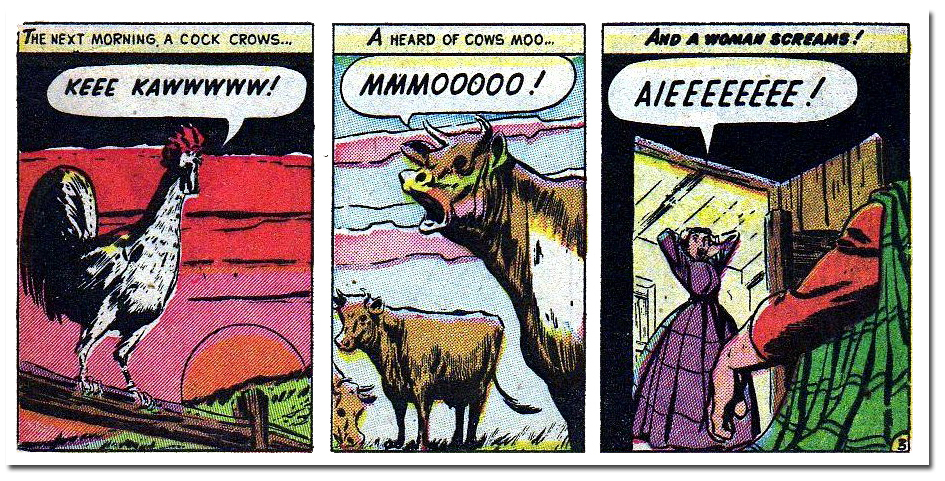

I had some fun this afternoon finding ordinary world examples of homopolymers (a word which, like "palindrome", describes an orthographic oddity without exhibiting it). I can't think of a run longer than two in ordinary English, though doubles are plentiful. A rite of passage growing up was learning to spell Mississippi correctly, which even has a triletter repeat as well. Anna's llamas & aardvarks quaff oolong incessantly. The only longer cases I could think of (Wikipedia has a list of triples; they are usually broken with a hyphen) are in onomatopoeia and interjections, or when a particular style of speaking (one that dogged me as a youth) is being portrayed. The snake in Harry Potter and the Philosopher's Stone: "Thanksss amigo!"

I found this gem via Google in a blog on comic books:

But in DNA sequences such homopolymers are rampant, and knowing their precise length is often critical. Terminator chemistries such as Illumina handle typical repeats well, though I could imagine very long ones might trip up some of the polymerization steps. Non-terminator chemistries often have trouble with the signal from a homopolymer repeat not being precisely linear to the length of the repeat. Ion Torrent, 454, PacBio and MinION have become somewhat known for homopolymer errors.

We saw this in some of our first Illumina datasets at Starbase, as we sequenced some biosynthetic clusters of interest that had been previously published. Since Streptomyces and their kin are extremely G+C rich, they often have runs of 6-10 G or C (longer is relatively rare), which can be trouble. Not just for modern chemistries; one cluster sequenced long ago with Sanger chemistry was full of microframeshifts. Get two in close proximity which balance each other and a gross frameshift is avoided. There is one paper in the literature that spent a paragraph or two trying to rationalize why one enzyme in a family is missing an otherwise universally conserved residue which the authors' crystal structure says is critical. Alas, they apparently never contemplated the correct answer: there's a set of microframeshifts which corrupt the ORF translation at precisely that spot.

Our second set of PacBio datasets was plagued with this issue; they solved the large repeat assembly problem beautifully, but left lots of frameshifts. In case you are wondering, our first dataset both was used only in hybrid mode (PacBio-only assembly software didn't exist yet) and was too short to solve our repeat problem. PacBio made huge strides in library preparation between spring 2012 (1st dataset) and spring 2013 (2nd dataset). Some of the later chemistries that improved read length actually caused worse homopolymer problems, even after polishing. I am awaiting a new dataset to assess.

Okay then, why am I optimistic this can be solved on MinION? Why do I see 1D as not being a huge hindrance? My reasons fall into 5 categories: limited application sensitivity, software fixes, protein engineering fixes, other library strategies and finally a biochemical fix using base analogs.

Many Applications Have Little or No Sensitivity to Homopolymer Errors

The first reason I am bullish is just from looking at application space. We can score any given sequencing application by the degree of sensitivity to homopolymer errors in general. The lists below are obviously not comprehensive, and one could argue many of these.

A huge number of potential applications for MinION are effectively insensitive to sequence quality, so long as that quality exceeds a reasonable threshold -- and the current 90% for 1D is probably enough in most if not all cases. This would include detecting designed tags, hybrid assembly, copy number analysis, resolving combinatorial DNA library configurations and validating the identity of strains.

In the small impact category I'd place resolving full length mRNA structures and resolving complex genome repeats. You'll get a lot of information from noisy reads, but you won't be able to get the whole enchilada. So if you are doing full length RNA-Seq on a known organism, you won't care, but if the RNA-Seq is your way to assess the protein coding potential of some previously unsequenced organism, it may be a hindrance. Similarly, many repeats will be resolvable even if homopolymers aren't quite right. But if you really need to measure the length of a homopolymer, then that won't work.

In the often, but not always, impactful category I'd put a lot of variant analyses. Solo de novo assembly is clearly in this category.

One could, if desired, go through each nanopore and SMRT publication and score it by these criteria. My guess is that you would find the MinION group somewhat weighted towards the "no impact" category. But for the middle category, other considerations would probably drive the decision. Is extreme speed valuable? Is portability valuable? Selective sequencing? Having personal control over timing? If yes to any of those, then one might sacrifice a bit of homopolymer resolution to gain one or more of those attributes. And should Oxford improve accuracy, then the edge for PacBio becomes fuzzier.

Software: One Place Where Big Data Might Play Well

A big reason I am bullish that the homopolymer problem is fixable is that this should be an ideal application for "big data" analyses. Oxford's raw signal is very rich, and the current callers are certainly not taking advantage of all the complexity of the signal. A flaw in many attempts to apply "big data" approaches to biology is sparse datasets; noise is much easier to find in these than signal. But with MinION, or any other high throughput sequencing approach, generating scads of data is child's play. Now, I must stress my view that there is good data for this and much better data. If I were in charge of solving the homopolymer problem, I'd be having specialized test articles constructed for this, a concept I've floated before. I'd be building molecules that were rich in different homopolymers in different contexts, with all the articles sequenced using high quality (i.e. Illumina) chemistry to validate the designs.

For $10K-$20K at current DNA synthesis rates and Illumina costs, one could build 100 kilobases or so of carefully designed DNA to try all these out. A quick back-of-envelope calculation suggests this would be in the right ballpark, or perhaps overkill, to represent all possible homopolymers in the form (#N{5,10}#), where # represents every possible 5-mer. Note: please, please check my math here!! -- 6 homopolymer lengths x 4 different bases for the homopolymer x 2^10 possible combinations of # and # = 98,304 -- but that must be off by a factor of 2 because of double strand complementarity so 50 kilobases. Note also that the long G and C runs, which are most important to me, may be difficult to synthesize as long G runs can form quartet structures that interfere with different steps of the gene synthesis process.

Get a 10 gigabase run of that dataset, and you are talking 100,000 fold mean coverage of the sequence. That's a lot of data for analysis with big algorithms, lending a good chance of teasing out any structure to the data that can be used to improve the homopolymer calls.
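Taking up the invitation to check the math, here is a minimal sketch of the arithmetic. It assumes the most literal reading of the design, in which each # is an independent, arbitrary 5-mer and every construct is synthesized separately; the variable names are mine. If that reading is right, full enumeration is far larger than 100 kilobases, so a practical design would need to sample or share flanking contexts, which this sketch does not model. The coverage figure, on the other hand, follows directly from a 10 gigabase run over a 100 kilobase target.

```python
# Sketch: size of a homopolymer test library of the form #N{5,10}#.
# Assumption (not from the post): each '#' is an independent, arbitrary 5-mer
# and every construct is built separately, with no sharing of flanks.

FLANK_LEN = 5
BASES = 4
HOMOPOLYMER_LENGTHS = range(5, 11)  # 5..10 inclusive, i.e. six lengths

# The product exactly as written in the text: 6 x 4 x 2^10
product_as_written = len(HOMOPOLYMER_LENGTHS) * BASES * 2**10

# Two independent 5-mers give 4^5 * 4^5 = 4^10 flank combinations
flank_combinations = BASES ** (2 * FLANK_LEN)
constructs = len(HOMOPOLYMER_LENGTHS) * BASES * flank_combinations

# Total bases if each construct is flank + homopolymer + flank
total_bases = sum(
    BASES * flank_combinations * (2 * FLANK_LEN + n)
    for n in HOMOPOLYMER_LENGTHS
)


def mean_coverage(run_yield_bases, target_bases):
    """Mean fold coverage of a target of a given size from a run of a given yield."""
    return run_yield_bases / target_bases


print("product as written:", product_as_written)
print("constructs under full enumeration:", constructs)
print("bases under full enumeration:", total_bases)
print("coverage of 100 kb from a 10 Gb run:", mean_coverage(10e9, 100e3))
```

Strand complementarity would roughly halve the enumeration, and excluding flanks whose adjacent base matches the homopolymer would trim it further, but neither changes the order of magnitude.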

Protein Engineering Routes

Nearly every sequencing company these days has a major effort in protein engineering. Nature has given us amazing starting material, but rarely are the proteins truly ideal for these artificial applications (rarely being perhaps too cautious; probably never). One common target is tolerance to modified nucleotides for labeled and/or terminator chemistries. Ion Torrent has enzymes with better homopolymer performance; PacBio ones with heightened resistance to photodamage. Engineering has probably been at least contemplated on every enzyme that is used in any associated method, from library preparation through template production to the actual sequencing operations.

Oxford's R9 chemistry was only released early this summer, and already Oxford has released an upgrade to the chemistry called R9.4, which (per Oxford's Wafer Thin Update) incorporates new engineered versions of both the pore and the motor protein. I'd expect further tuning by this route. In particular, the more metronomic the motor protein, the better the chance to resolve homopolymers by using the duration of the events as evidence for the underlying length.
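To make the "metronomic" point concrete, here is a toy simulation, a sketch under loud assumptions rather than a model of any real motor. It assumes each base takes an independent, roughly Gaussian step time (real dwell distributions are likely more skewed), and it estimates run length by dividing total dwell by the mean step time. The function names, the 4 ms mean step and the jitter values are all hypothetical.

```python
import random


def estimate_run_length(dwell_seconds, mean_step_seconds):
    """Nearest whole number of bases implied by a dwell time, minimum 1."""
    return max(1, round(dwell_seconds / mean_step_seconds))


def call_accuracy(true_length, mean_step, jitter, trials=2000, seed=0):
    """Fraction of simulated runs whose length is recovered from total dwell.

    jitter is the standard deviation of each step time, expressed as a
    fraction of the mean step time (0.0 would be a perfect metronome).
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        dwell = sum(
            max(0.0, rng.gauss(mean_step, jitter * mean_step))
            for _ in range(true_length)
        )
        if estimate_run_length(dwell, mean_step) == true_length:
            correct += 1
    return correct / trials


# Hypothetical 8-base homopolymer, 4 ms mean step time
for jitter in (0.0, 0.05, 0.2, 0.5):
    print(jitter, call_accuracy(8, 0.004, jitter))
```

In this toy model the error in the length estimate grows with the square root of the run length times the jitter, which is one way to see why longer runs are harder and why a steadier motor helps.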

Protein engineering campaigns are most practical when improved performance can be read out directly. While it isn't quite the same as a selection, I can imagine in the abstract (though not quite in believable detail) how one could generate libraries of mutant pores and divide the clones into pools. Each pool could be expressed and put on a membrane in a flowcell, then used to sequence standard test articles. Pools showing pores with better performance could then be progressively subdivided (a technique known as sib selection) until a single winner pore is identified.

Alternative Library Strategies

There are at least two other ways to generate sequencing libraries that should fall outside the PacBio patent (though that doesn't mean someone else's patent doesn't apply) that could be options as well. Both of these involve circularizing the original DNA molecule and performing additional biochemistry; whether this extra hassle is desirable would be up to the individual scientist to decide.

In INC-Seq, described for nanopore sequencing in a pre-print, circularized templates are replicated using a rolling circle mechanism, creating a new linear molecule which repeats the original template multiple times. By sequencing through the INC-Seq array, parsing it into repeat elements at the linkers and then combining the copies, a high quality consensus can be generated. Again, I haven't explored the patent literature around this, but the rolling circle mechanism has been understood for a long time, and has long been used to generate sequencing templates.
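The parse-then-consensus idea can be sketched in a few lines. This is not the INC-Seq pipeline itself, just a toy illustration under strong assumptions: the linker is read without error, the copies agree on the order of bases and differ only in homopolymer lengths, and the linker and template sequences below are made up. Real reads would need alignment rather than exact string matching.

```python
from itertools import groupby
from statistics import median_low


def run_length_encode(seq):
    """'AAACC' -> [('A', 3), ('C', 2)]"""
    return [(base, len(list(group))) for base, group in groupby(seq)]


def split_copies(read, linker):
    """Split a concatemer read into template copies at a known linker."""
    return [copy for copy in read.split(linker) if copy]


def homopolymer_consensus(copies):
    """Consensus over copies that may disagree on homopolymer lengths."""
    encoded = [run_length_encode(c) for c in copies]
    # Keep only copies that share the most common run-compressed base order
    orders = ["".join(base for base, _ in runs) for runs in encoded]
    common = max(set(orders), key=orders.count)
    kept = [runs for runs, order in zip(encoded, orders) if order == common]
    consensus = []
    for column in zip(*kept):
        base = column[0][0]
        length = median_low(n for _, n in column)  # median run length
        consensus.append(base * length)
    return "".join(consensus)


LINKER = "GATATCGC"  # hypothetical linker sequence
noisy_copies = ["ACGGGGGTTA", "ACGGGGTTA", "ACGGGGGTTA", "ACGGGGGGTTA", "ACGGGGGTTA"]
read = LINKER.join(noisy_copies)

copies = split_copies(read, LINKER)
print(homopolymer_consensus(copies))
```

Taking the median of the run lengths only helps if the length errors are scattered around the truth; a systematic bias toward undercalling would survive any number of copies.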

In circle sequencing, which has been used with Illumina sequencing, a random barcode is incorporated in the circularization linker. After circularization, the template is amplified by high fidelity PCR. Combining reads with the same barcode enables computing a consensus.

For PCR-based methods, a similar strategy is to incorporate a random barcode into the first round of amplification; each input molecule gets its own code. Amplification with non-barcoded primers then generates a pool in which each input molecule is represented multiple times. By combining all reads with the same input molecule barcode, a high quality consensus can be constructed.
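The barcode bookkeeping behind this strategy is simple enough to sketch. The example below assumes the barcode sits at the start of each read, is read without error, and that the inserts are already aligned and of equal length, so it only corrects substitutions; handling the indels that homopolymers produce would require a real alignment step. The function names, barcode length and `min_reads` threshold are illustrative choices of mine.

```python
from collections import Counter, defaultdict


def group_by_barcode(reads, barcode_len):
    """Group reads by their leading barcode; returns {barcode: [insert, ...]}."""
    groups = defaultdict(list)
    for read in reads:
        groups[read[:barcode_len]].append(read[barcode_len:])
    return dict(groups)


def majority_consensus(inserts):
    """Per-column majority vote over equal-length, pre-aligned inserts."""
    return "".join(
        Counter(column).most_common(1)[0][0] for column in zip(*inserts)
    )


def consensus_per_molecule(reads, barcode_len, min_reads=3):
    """Consensus for every input molecule seen at least min_reads times."""
    return {
        barcode: majority_consensus(inserts)
        for barcode, inserts in group_by_barcode(reads, barcode_len).items()
        if len(inserts) >= min_reads
    }


reads = [
    "AACG" + "ACGTAC",
    "AACG" + "ACGTAC",
    "AACG" + "ACCTAC",  # one substitution error
    "TTGA" + "GGGTTT",  # molecule seen only twice, so dropped
    "TTGA" + "GGGTTT",
]
print(consensus_per_molecule(reads, barcode_len=4))
```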

I won't claim this is a comprehensive survey of such approaches, or that more aren't hatching in the minds of clever scientists. The hairpin strategy claimed by PacBio is far from being the only way to read the same molecule multiple times.

Base Analogs

Finally, particularly for certain applications, there is an obvious strategy that could be tried, though actually implementing it would require a lot of calibration work -- but then again, that's what those test articles are for! In particular I am thinking of the case in which one is detecting variants by PCR. The thing to remember about nanopore sequencing, which can be either a feature or a bug, is that any change in the nucleotides (well, perhaps excepting isotopes) seems to alter the signal coming from the nanopore. There is a rich literature for using various base analogs to improve PCR of difficult templates, backed up by an older literature of using analogs to solve difficult Sanger templates. Suppose PCR were conducted using a mix incorporating 50:50 ratios of each base with an analog (e.g. methyl-A, methyl-C, deaza guanine, uridine [though uridine kills polymerases of archaeal origin] or 2-thiothymidine). In this case, few sequenced molecules would have a true homopolymer; homopolymers in input DNA would nearly always end up as a mixture of the actual base and the analog. This would again require a lot of tuning of the basecalling software to deal with all these odd bases in the mix, but that's why those calibration test article DNAs are so valuable.

Concluding Thoughts

I first showed that many nanopore applications are insensitive to homopolymer errors, while some have modest or strong sensitivity. I've presented a number of possible solutions to the homopolymer problem, some more speculative than others. There is no guarantee this can be solved, but the existence of multiple strategies raises the odds that at least one of them will work, at least in some situations. Come back in a year or two and you can see whether I am or am not completely wrong.

6 comments:

Hi Keith,

Thanks a lot for that ! It's amazing that you took the time to explain stuff and share some of your knowledge. I definitely learnt a few things there.

On the systemic homopolymer error, I now realize I was confused when I first asked about it. Now I understand the homopolymer error better: essentially, if AA and AAA generate the same amplitude, it's difficult to know how many As there are, and this results in indel errors. But some of this can be corrected by reading multiple times and through your potential solutions/tradeoffs.

That made me realize I also had a question on simple systemic error.

Nanopore tech relies on measuring the amplitude of current; I think in ONT's case, it's measured for 3 nucleotides(?) at a time. If we have {YYY} as a triplet, then there are 64 (4^3) possibilities. In order to get it right, you need to have 64 different amplitudes. However, if there are fewer than 64 detectable levels, this will generate a systemic error which can't be recovered no matter how many times this is read.

https://www.ncbi.nlm.nih.gov/pubmed/23744714 looks interesting in that regard - You can skip to section 3.1 for an interesting explanation. I guess how good ONT's ASIC is in differentiating the amplitudes would tell us how low the systemic threshold can be. As a high systemic threshold can't be resolved by reading multiple times, the electronics have to close this gap. I guess they are always improving. Would you know where they stand on this, e.g. a map of nucleotides to amplitudes that shows improvement etc?

I fully agree with you that portability, speed, selective sequencing etc are valued and therefore one can substitute some of the current NGS methods with ONT if they can sacrifice some other functionality. I also agree that it was probably harsh to generalize ONT's behaviour; I guess it's more like promising too much too early, as you mentioned.

In any case, the area is changing rapidly, companies like twoporeguys probably would take some of ONT's cake as ONT takes PacBio's and PacBio eats some of Illumina's :)

On your other points, for what it's worth, Dovetail offers Pacbio now and Pacbio reports their total no of papers > 1800.

Mohan,

My pleasure.

Looking at Dovetail's website, they are using PacBio in their genome sequencing service, but I read it as they first build a genome using conventional technology and then upgrade it with Chicago sequencing. It doesn't specify, but I'd be stunned if they ran this anywhere other than Illumina -- the technique wants lots of events (you are building a statistical model of what is near what using counts; big counts for smaller error and higher resolution) & getting long reads has no obvious benefit here.

WRT base calling on Oxford, you've touched on the fundamental challenge, even in the simpler space of assuming the sequence has only 4 bases (as opposed to more due to biologically modified bases and DNA damage). Even if nature/engineering gave you the perfect pore with 64 distinct signal levels for the 64 different trinucleotides (which is beyond unlikely), noise in the system will make those signal levels overlap in real life. So looking at the raw signal will give an ambiguous result. But you can use adjacent information to help resolve this - trinucleotide N+1 must be one with 2-base overlaps with trinucleotide N and trinucleotide N+2. So the HMM and RNN models (and whatever else anyone cooks up) fit the series of events to a model of the sequence. The sampling rate of the electronics exceeds the pace of the motor by several fold, so a model could also attempt to extract information from the transitions. And while most of the signal is from a trinucleotide, more distant sequences will shift it. In other words, the DNA passing through the pore is subject to many influences which are convolved into the output electrical signal.
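The overlap constraint on its own can be shown with a toy example. This is only the hard-constraint skeleton of the idea, not an HMM or RNN: it assumes each event has already been reduced to a short list of trinucleotides whose signal levels are indistinguishable, and it simply enumerates the chains in which consecutive trinucleotides overlap by two bases. The candidate lists are invented for illustration; a real caller would weigh probabilities (e.g. with Viterbi decoding) rather than enumerate.

```python
def consistent_paths(candidates_per_event):
    """Enumerate k-mer chains where each k-mer overlaps the next by k-1 bases."""
    paths = [[kmer] for kmer in candidates_per_event[0]]
    for candidates in candidates_per_event[1:]:
        paths = [
            path + [kmer]
            for path in paths
            for kmer in candidates
            if path[-1][1:] == kmer[:-1]  # suffix of previous == prefix of next
        ]
    return paths


def spell(path):
    """Collapse an overlapping k-mer chain into the sequence it spells."""
    return path[0] + "".join(kmer[-1] for kmer in path[1:])


# Hypothetical ambiguity: each event matches two or three trinucleotides
events = [
    ["ACG", "TTG"],
    ["CGT", "CGA", "TGC"],
    ["GTA", "GAC"],
    ["TAC", "CCA"],
]

paths = consistent_paths(events)
print([spell(p) for p in paths])
```

Here 24 raw combinations collapse to a single consistent chain. Note what the trick cannot do: within a homopolymer every trinucleotide is identical and overlaps itself, so the constraint says nothing about how many times it repeats.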

One of the advantages to any "2D" technique (the one PacBio is claiming or the other options I outlined or even post-alignment polishing perhaps) that reads both strands is that the particular oddities will probably not be symmetrical, so by combining information one has a much richer dataset which can be resolved into a single model.

New players are always welcome; Genia is a more near-term threat to both companies but others such as TwoPoreGuys might make inroads -- but we can't really assess that until we start seeing some actual data, can we? And better doesn't always win -- just ask the Betamax team at Sony. I'm not saying the others won't win, but the market has its fair share of random noise as well.

PacBio is losing $15-20M per quarter, Illumina is still alive and there are so many competitors. Reducing the whole story to these two tiny companies may be a huge mistake. On the other hand the "agent of change" seems to be really sure about the future of his company (and so arrogant). 1.5-2 years to see the end of the story?

"1.5-2 years to see the end of the story?"

People have been saying that for 9 years already.

Regarding base analogs - the compute load increases exponentially with the size of the nucleotide dataset. So the 50:50 mix proposed above would probably make their current base caller difficult to implement locally (they still need to run 2D basecalling in the cloud due to computation load). This does not mean that a few years from now better methods would not be available.

On the other hand I am not sure that the readout speed of the electronics has as much headroom as indicated here. Reading out 10-20pA at 10KHz rates is difficult enough. Doing so with 0.5pA resolution increases the challenge, and the difference between some k-mers is likely on the order of 0.1pA (I believe ONT has claimed either 4-mers or 5-mers as their base set). At 500bps the transient signal between two neighboring nucleotides is ~0.2ms (assuming 10% of the time is a transition, likely a VERY generous assumption). In order to capture that adequately you would need 10x over sampling which gives you AT BEST a 20us integration time to work with. There is no way that I know of to measure with 0.1pA accuracy at 50KHz.
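The timing arithmetic in that paragraph can be reproduced with a short sketch. The inputs (500 bases per second, 10% of the time spent in transition, 10x oversampling) are the commenter's stated assumptions; the function name is mine.

```python
def transition_budget(bases_per_second, transition_fraction, oversampling):
    """Return (transition time, integration time, sample rate in Hz)."""
    time_per_base = 1.0 / bases_per_second
    transition_time = time_per_base * transition_fraction
    integration_time = transition_time / oversampling
    sample_rate_hz = 1.0 / integration_time
    return transition_time, integration_time, sample_rate_hz


transition, integration, rate = transition_budget(500, 0.10, 10)
print(f"transition: {transition * 1e3:.2f} ms")
print(f"integration window: {integration * 1e6:.0f} us")
print(f"required sample rate: {rate / 1e3:.0f} kHz")
```

This gives 0.2 ms per transition, a 20 microsecond integration window and a 50 kHz sample rate, matching the figures quoted above.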

My biggest problem with ONT is that they grossly exaggerate their ability to deliver on the schedules they advertise. They either know that they are making misleading promises or need to get better planning. This has been true about EVERY announcement they have made since 2012 so it is not unreasonable to assume that they are intentionally misleading. Some may not want to call this lying, but at its core it essentially is. The latest example is their million pore thing that they discussed in the latest update. The statement was "this is at least two years away so don't wait for it". How about "at least 5 years if we stop work on anything else and likely 10 if we do it while improving and further developing our current product line and it will take another $200M+ to turn into a product". Sure, they need to raise money so a certain degree of hype is unavoidable. But I can't think of any other company that I pay attention to which is as liberal with facts.

They have what looks to be good technology and a decent shot at being successful in the marketplace. The CTO needs to just shut up and focus on building a great product. This is what PacBio did under Hunkapiller and is the only reason they are still around. If they had kept bragging how they would wipe their arses with Illumina stock certificates, as they used to and as ONT's CTO claims today, they would have been out of business circa 2013.

"Regarding base analogs - the compute load increases exponentially with the size of the nucleotide dataset. So the 50:50 mix proposed above would proba.."

None of what you say here applies to their latest base caller, which isn't HMM based and in which the concept of a kmer is somewhat abstract. The notional kmers overlap by 1 base in any case, so you can afford to miss a few. As their users have shown in public, analogues can be detected very well in signal, both single molecule and consensus.

Given how ambitious what they are doing is, some timing difficulties can be expected. Their customers don't seem to mind. What they have done successfully is get customers used to ongoing updates, both technical and time wise, and to a rapid rate of innovation, more like an agile open software project. That is very different from the traditional 'ABI' school.

I don't recall seeing any bragging about wiping arses with Illumina stock? Where was that?
